The broadcast industry is in the unusual position where for the last five or so years there has been a clear consensus that broadcast production and technology will move to the cloud. However, only baby steps have been made towards that goal. The industry’s approach to making this happen is hugely fragmented with vendors and other institutions looking at the problem solely from their perspective. And it’s getting worse. This blog post looks at the challenges with this increasing fragmentation and why it’s becoming more of a problem for end-users and vendors.

This blog post is the culmination of experiences as a vendor (admittedly a vendor which sits at the edge) and from numerous conversations with end-users trying, and in many cases struggling, to implement cloud production. Let’s look at the various topics in the field and the challenges they have (which inevitably are leading to slow adoption):

But I’m doing cloud production (with my single-vendor solution)

The main challenge with single-vendor solutions is that you are at the mercy of that single vendor’s capabilities. The vendor might be great at certain things, such as mixing, but not so great at others, such as audio processing or video transport.

What end-users want is the ability to connect different vendors together, as they can in SDI or ST-2110, so that they can choose the best mixer, the best audio processor, the best framerate converter, the best encoder etc.

This part is obvious. The part that isn’t is that this workflow needs to be strictly compatible with a traditional broadcast workflow on the ground (not a web streaming workflow). That means an NAB demo showing RTMP output won’t cut it! RTMP isn’t a video transport format capable of being delivered to a production facility, something we’ve talked about in a previous blog post.

Specialist vendors (and the end-users accustomed to specialist vendors) will have their own qualms with single-vendor solutions but let’s look at things from our perspective, the perspective of an encoder/decoder vendor:

Drifting input streams

Virtually all single-vendor cloud production solutions need to receive multiple feeds, such as different camera angles. These come from different encoders, which may be running from different clocks on site. The camera angles quickly drift apart, meaning a cut between angles can show the same event (e.g. a goal) twice, something unappealing for a viewer. Often this issue is handwaved away with “NTP timecode” and other flawed approaches (there’s no NTP input on a camera). This is because the single vendor is a “jack of all trades, master of none”. In this case they lack the expertise that specialist decoder vendors have built over decades to implement a phase-locked loop (PLL) that manages clock drift between different streams and keeps them in sync. Building a good PLL and frame synchronisation algorithm is extremely difficult.
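
To put rough numbers on the drift problem, here is a minimal sketch, assuming two free-running encoder clocks that differ by a hypothetical 50 ppm and a 50 fps feed. The first function computes how long it takes for the feeds to slip by one frame. The `SoftwarePll` class is a toy proportional-integral loop that estimates the sender's clock rate from timestamp pairs. The names, the gains and the jitter-free input are all assumptions made for illustration, not anyone's shipping design.

```python
from typing import Optional


def seconds_until_one_frame_drift(ppm_offset: float, frame_rate: float) -> float:
    """Time for two free-running clocks to slip apart by one frame period."""
    frame_period = 1.0 / frame_rate
    return frame_period / (abs(ppm_offset) * 1e-6)


class SoftwarePll:
    """Toy PI loop tracking a remote clock from (remote, local) timestamps.

    Gains are illustrative assumptions and are only tuned for a steady
    20 ms update interval with no jitter.
    """

    def __init__(self, kp: float = 0.1, ki: float = 1.0) -> None:
        self.kp = kp
        self.ki = ki
        self.rate = 1.0                      # remote seconds per local second
        self.phase: Optional[float] = None   # current estimate of remote time
        self.last_local: Optional[float] = None

    def update(self, remote_ts: float, local_ts: float) -> float:
        if self.phase is None or self.last_local is None:
            # First sample: lock phase directly, nothing to compare against.
            self.phase, self.last_local = remote_ts, local_ts
            return self.phase
        dt = local_ts - self.last_local
        self.last_local = local_ts
        predicted = self.phase + self.rate * dt
        error = remote_ts - predicted
        self.rate += self.ki * error               # integral path: frequency
        self.phase = predicted + self.kp * error   # proportional path: phase
        return self.phase


if __name__ == "__main__":
    print(seconds_until_one_frame_drift(ppm_offset=50, frame_rate=50))
    pll = SoftwarePll()
    true_rate = 1 + 50e-6
    for n in range(2000):
        local = n * 0.02
        pll.update(remote_ts=local * true_rate, local_ts=local)
    print(f"estimated rate offset: {(pll.rate - 1) * 1e6:.2f} ppm")
```

With these assumed figures the feeds slip by a whole frame in roughly 400 seconds, i.e. in under seven minutes. The loop above only converges this neatly because the input is perfectly clean. A production decoder has to cope with network jitter, packet loss and timestamp discontinuities, and that is where the real difficulty lies.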

Poor transport stream output

Many cloud production vendors suffer from non-compliant transport stream output. Furthermore, these vendors don’t understand why this is a problem. “It’s just SRT” after all (it’s not). We’ve covered in a previous blog post why strict transport stream compliance is important in SRT – it allows the decoder on the ground to reconcile timing between the audio/video coming from the cloud to avoid stutters and audio pops/clicks. Most cloud production systems produce problematic transport stream output that either requires long-latency decoders to hide the issues or is sometimes impossible to fix.
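
One concrete thing a compliance check looks at is the cadence of the Program Clock Reference (PCR), the 27 MHz clock sample a decoder uses to recover the sender's timing. The sketch below is an illustration, not the set of checks any particular analyser performs. It extracts the PCR from a 188-byte transport stream packet and flags gaps longer than the 100 ms upper bound in ISO/IEC 13818-1. `make_pcr_packet` is a hypothetical helper that exists only to build test input.

```python
from typing import List, Optional, Sequence, Tuple

TS_PACKET_SIZE = 188
PCR_CLOCK_HZ = 27_000_000
PCR_WRAP = (1 << 33) * 300      # 33-bit base counts 90 kHz ticks, x300 for 27 MHz
MAX_PCR_INTERVAL_S = 0.1        # upper bound from ISO/IEC 13818-1


def extract_pcr(packet: bytes) -> Optional[int]:
    """Return the PCR in 27 MHz ticks, or None if the packet carries none."""
    if len(packet) != TS_PACKET_SIZE or packet[0] != 0x47:
        raise ValueError("not a 188-byte transport stream packet")
    if not packet[3] & 0x20:               # no adaptation field present
        return None
    if packet[4] < 7 or not packet[5] & 0x10:   # too short, or PCR flag clear
        return None
    b = packet[6:12]
    base = (b[0] << 25) | (b[1] << 17) | (b[2] << 9) | (b[3] << 1) | (b[4] >> 7)
    ext = ((b[4] & 0x01) << 8) | b[5]
    return base * 300 + ext


def pcr_gaps_exceeding(
    pcrs: Sequence[int], limit_s: float = MAX_PCR_INTERVAL_S
) -> List[Tuple[int, int]]:
    """Return consecutive PCR pairs spaced further apart than limit_s."""
    gaps = []
    for prev, cur in zip(pcrs, pcrs[1:]):
        delta = (cur - prev) % PCR_WRAP    # tolerate counter wrap-around
        if delta / PCR_CLOCK_HZ > limit_s:
            gaps.append((prev, cur))
    return gaps


def make_pcr_packet(pcr: int, pid: int = 0x100) -> bytes:
    """Hypothetical test helper: adaptation-field-only packet carrying a PCR."""
    base, ext = divmod(pcr, 300)
    header = bytes([0x47, (pid >> 8) & 0x1F, pid & 0xFF, 0x20])
    field = bytes([
        183, 0x10,                          # adaptation length, PCR flag
        (base >> 25) & 0xFF, (base >> 17) & 0xFF,
        (base >> 9) & 0xFF, (base >> 1) & 0xFF,
        ((base & 1) << 7) | 0x7E | (ext >> 8), ext & 0xFF,
    ])
    stuffing = b"\xff" * (TS_PACKET_SIZE - len(header) - len(field))
    return header + field + stuffing


if __name__ == "__main__":
    ticks = [0, 1_080_000, 6_480_000]       # 40 ms apart, then 200 ms apart
    pcrs = [extract_pcr(make_pcr_packet(t)) for t in ticks]
    print(pcr_gaps_exceeding(pcrs))
```

A real analyser would also look at PCR accuracy, PTS/DTS ordering and buffer behaviour. PCR spacing is simply the easiest of these to show in a few lines.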

Other issues

One vendor uses WebRTC for monitoring, perhaps not understanding that WebRTC regularly stutters owing to limitations in the consumer technology it uses. Again, this stems from a lack of understanding of timing and clock recovery. The resulting issues are confusing to operators, who cannot be sure whether a problem is caused by the consumer-grade transport or by something else in the pipeline. There’s a lot to be said about the browser as a control and monitoring platform, but as we’ve seen in other high-performance/low-latency industries like gaming, the browser doesn’t cut it.

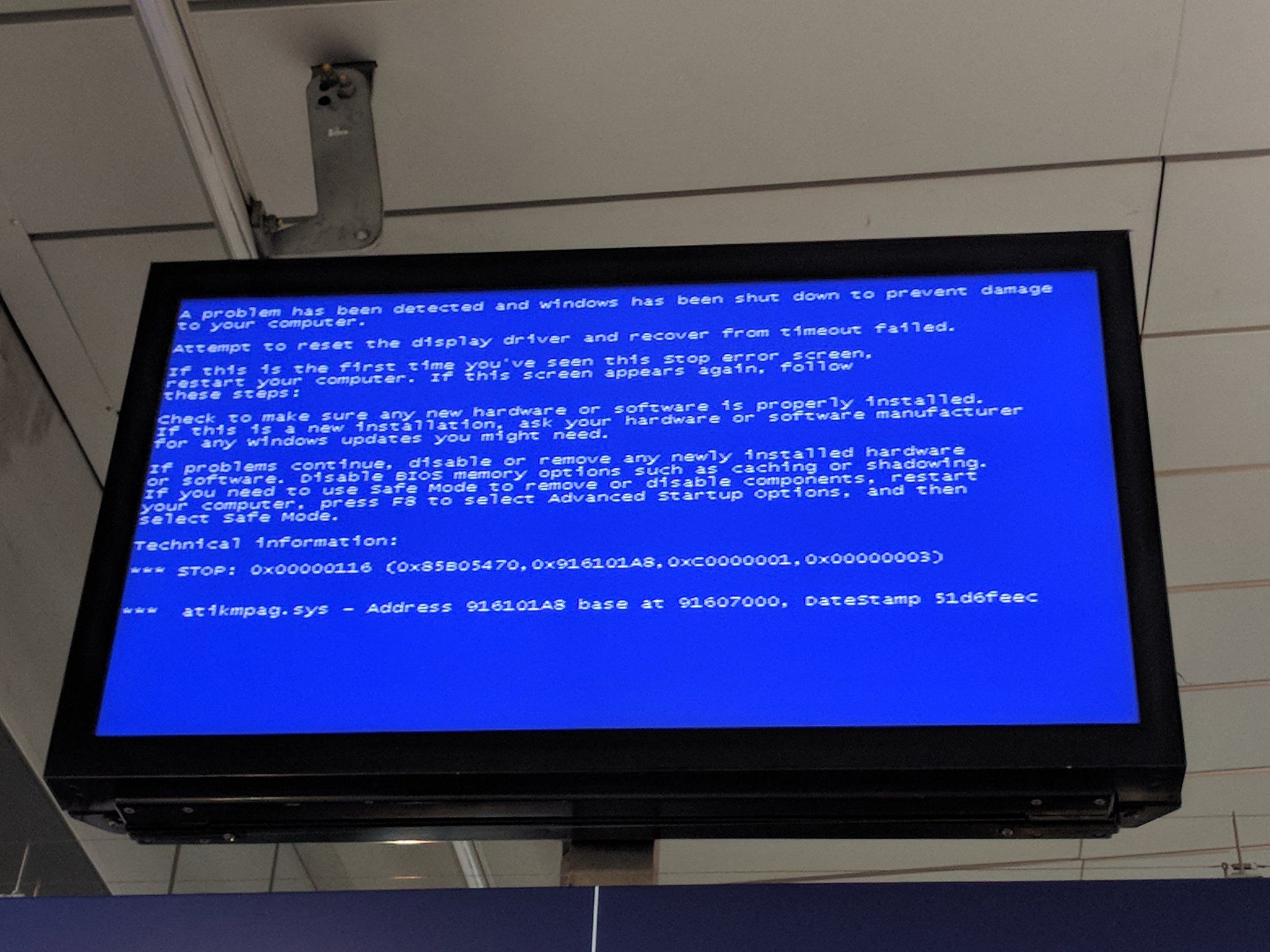

Many vendors also insist on using Windows, which is costly on some cloud platforms and requires particular care from a system administration perspective.

The flaws with single-vendor solutions can be seen immediately from the perspective of just one area of technology. The same kinds of issues are present across the dozens of other fields a single-vendor solution tries to implement.

Proprietary transport solves everything!

There are of course methods to interconnect different vendors with proprietary interconnects, most commonly NDI. The operational challenge is that, because it’s proprietary, it’s essentially impossible to fault-find. This means that frame drops and stutters are impossible to diagnose across a broadcast chain (probably related to the bursty nature of TCP but who knows really). In fact, sometimes different monitors in a facility show different behaviour, panicking operators, because proprietary transport like NDI is nondeterministic (i.e. it behaves differently depending on the endpoint).

Furthermore, use of proprietary transport creates heavy vendor lock-in, restricting innovation. We all saw how the Internet Explorer 6 web browser accelerated web applications to market in the early 2000s, but then led to decades of vendor lock-in, some of which is still prevalent two decades later! In the long term it held back innovation (e.g. in areas like HTML5), because everyone had to fall back to supporting the legacy capabilities of this browser. Microsoft ruled the world in the 2000s.

Operationally and commercially, proprietary transport poses huge risks to vendors and end-users. Managing the multiple different proprietary transport methods in a facility is also exceptionally challenging.

Proprietary frameworks

There is a new set of proprietary frameworks (e.g. Matrox Origin) out there that on paper appear to be more open. But in the end, they have the same problems as proprietary transport. Vendors lack the ability to control the pipeline and demarcate responsibility. End-users lack the monitoring and transparency needed to fault-find during a live production. It again makes production dependent on a single vendor.

This week’s RDMA flavour

There are several approaches using Remote Direct Memory Access (RDMA). This is used extensively in High Performance Computing (HPC) where data from one virtual machine (VM) can be accessed by another. In the case of broadcast an uncompressed video frame could leave the VM of one vendor and appear (via some underlying network transport) in the VM of another vendor.

There are plenty of RDMA demos out there, but they are all too low level and lack any appreciable understanding of the timing and control planes. For example, what defines whether frames are arriving early or late? How do you control these RDMA flows?
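
To make the timing question concrete, here is a minimal sketch of the kind of rule a timing plane would have to define before “early” and “late” mean anything. It is a hypothetical model, not taken from any RDMA proposal. It assumes every frame carries an origin timestamp on a clock shared with the receiver, and that the receiver is configured with a latency budget and a buffer depth. All names and numbers are assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Arrival(Enum):
    EARLY = "early"      # receiver has nowhere to hold the frame yet
    ON_TIME = "on_time"
    LATE = "late"        # presentation deadline already missed


@dataclass(frozen=True)
class TimingWindow:
    """Hypothetical receiver-side timing rule, all values in seconds."""

    latency_budget: float   # origin timestamp to presentation deadline
    buffer_depth: float     # how far ahead of the deadline frames can be held

    def classify(self, origin_ts: float, arrival_ts: float) -> Arrival:
        deadline = origin_ts + self.latency_budget
        if arrival_ts > deadline:
            return Arrival.LATE
        if arrival_ts < deadline - self.buffer_depth:
            return Arrival.EARLY
        return Arrival.ON_TIME


if __name__ == "__main__":
    window = TimingWindow(latency_budget=0.100, buffer_depth=0.060)
    for arrival in (10.02, 10.05, 10.12):
        print(arrival, window.classify(origin_ts=10.0, arrival_ts=arrival).value)
```

Without an agreed rule of this kind, and an agreed clock to evaluate it against, two vendors moving frames over RDMA have no common way to decide whether a frame should be held, played or dropped.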

Centrally Planned Architecture Cloud Production

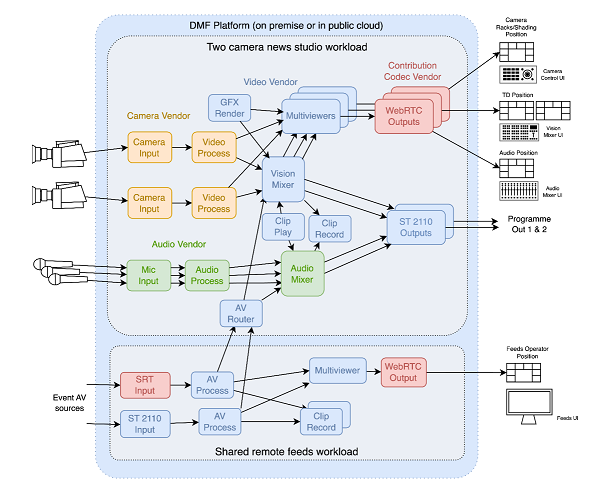

There is also a new “Centrally Planned” approach to cloud production from the EBU known as the Dynamic Media Facility. This consists of a bunch of white papers containing edicts about how some EBU members would like a future media facility to be.

Unfortunately, coming along without an understanding of the technology (especially the timing plane), saying to vendors “you must do this” and presenting a complex architecture isn’t helping the situation. There’s not much point saying you want a Boeing 747 if you haven’t even got a plane that flies reliably yet. WebRTC is also mentioned extensively without a real-world understanding of the challenges described above.

These architectural approaches again forget about the real-world requirement that all this needs to integrate with existing ground-based workflows, something conveniently handwaved away in all the documentation. In fact, most EBU members still have SDI facilities and so a timing plane is mandatory.

Industry consensus

It’s clear there is no industry consensus on how to reach the nirvana of multivendor cloud production. There is one glimmer of hope in the form of the VSF TR-11 (Ground-Cloud-Cloud-Ground, or GCCG) API. It presents a vendor-neutral API for transporting video between different vendor instances, known as workflow steps. The timing model is rigorous, allowing simple interoperation with SDI or ST-2110 workflows. As the timing model is analogous to the VBV in MPEG-TS, there is proven expertise in this field. There is an NMOS-based control plane (not the best but better than nothing). This is really the only attempt to make a true multivendor cloud production technology stack that’s free and open source (the API is available on GitHub).

Unfortunately, many vendors want a simple SDK they can implement without thinking about the underlying technology, essentially a “lift-and-shift”. This is analogous to forcibly putting an electric engine in a gasoline car and calling it an electric car. It has none of the benefits of cloud-native technology. GCCG is not that. It requires vendors to understand exactly what their products are doing once they have been translated to the cloud. The challenge is, of course, convincing vendors to make cloud-native products when 99% of their sales are on-prem.

But it does provide a nirvana for end-users, the ability to spin up a multivendor production in the cloud and have it interoperate with feeds coming from/to the ground.

We need real projects

The other issue that the industry faces is the OPEX nature of cloud technology. This makes it very difficult for vendors to make an investment in technology development if end-users are going to use it for a few hours per year. This chicken-and-egg problem is currently stifling development. COVID forced the industry to use cloud but in the five years since, the industry has sadly made limited progress for the reasons described above.

End-users need to provide real projects with deadlines and real volume. It’s fair to say the 2020 Tokyo Games was the turning point for 2110/NMOS in the industry, and cloud production needs a similar milestone. But small proof-of-concepts deliver neither the commercial nor the operational impetus to make things happen.

Conclusions

Single-vendor or proprietary methods of cloud production have hit their glass ceiling, as they lack the technical capabilities to meet the needs of productions beyond Pro-AV or the low end. In the end, reaching cloud production will need vendors to talk to each other openly in a non-competitive environment (such as the GCCG) and end-users to encourage this and have real projects with real deadlines. Let’s see what happens.